Accessibility Strengthening Act (BFSG) comes into force - what it means now

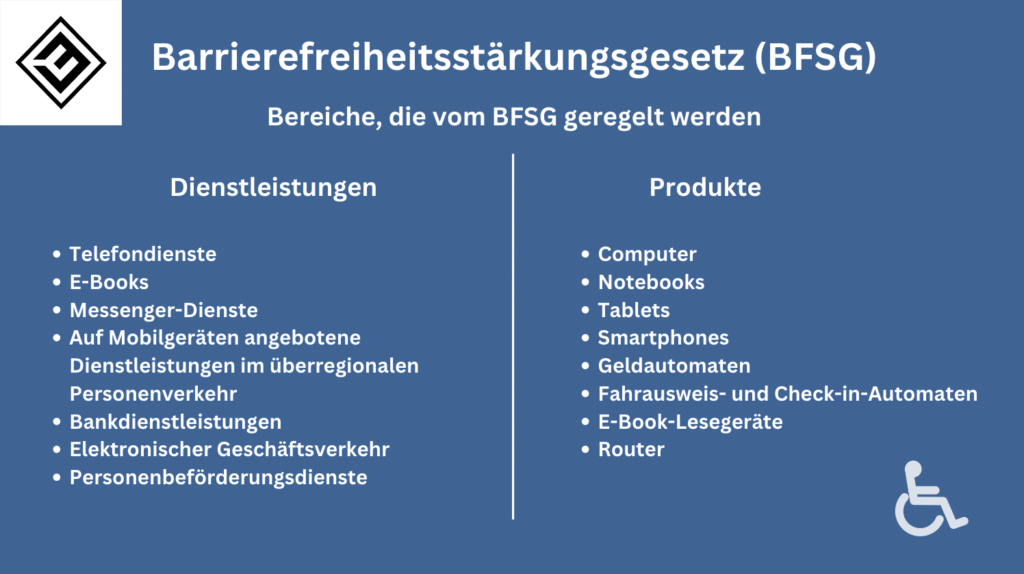

The Accessibility Strengthening Act (Barrierefreiheitsstärkungsgesetz, BFSG) has recently become mandatory: its requirements have applied since 28 June 2025. For companies, this means that websites, online shops, apps, and other digital services covered by the law must be accessible. The BFSG is not just a legal obligation; it also offers opportunities. Accessible offerings let companies reach new target audiences and strengthen their brand image in the long term.

Why is the BFSG so important for companies?

The BFSG aims to make digital services accessible so that people with disabilities can participate equally in digital life. For companies, this means not only avoiding legal risks and fines but also expanding their target audience. In addition, the user experience improves for all visitors. Digital accessibility thus becomes a competitive factor and an essential part of modern online marketing strategies.

What is the best way for companies to start implementing accessibility?

What is an accessibility test and why is it so important?

The first step toward complying with the BFSG is a thorough accessibility audit. This involves checking whether the digital presence meets technical and content-related requirements. It’s not just about technical issues like missing alt texts or poor color contrasts. The focus is also on usability for people with different impairments. Only through a comprehensive analysis can weaknesses be effectively addressed.
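To make the technical part of such an audit more concrete, here is a minimal sketch of two automated checks mentioned above: images without alt text and insufficient color contrast. It assumes the WCAG 2.x definitions of relative luminance and contrast ratio, and the level AA threshold of 4.5:1 for normal-size text. The function names and sample HTML are hypothetical. Automated checks like these catch only a small share of problems; usability for people with different impairments still has to be tested manually.

```python
from html.parser import HTMLParser


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    l1, l2 = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (l1 + 0.05) / (l2 + 0.05)


def passes_aa_normal_text(foreground: str, background: str) -> bool:
    """WCAG 2.x level AA requires a ratio of at least 4.5:1 for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> that has no alt attribute at all.

    An empty alt="" is deliberately NOT flagged: it is the correct markup
    for purely decorative images.
    """

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <img ... />.
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "(no src)")


def find_images_without_alt(html: str) -> list[str]:
    """Return the src values of all images in `html` that lack an alt attribute."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # 21.0
    print(passes_aa_normal_text("#777777", "#FFFFFF"))
    sample = '<img src="logo.png" alt="Company logo"><img src="banner.png">'
    print(find_images_without_alt(sample))
```

Mid-gray #777777 on white lands just below the 4.5:1 threshold, which is exactly the kind of near miss that is hard to spot by eye and easy to catch with a script.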

What should you consider when choosing a test provider?

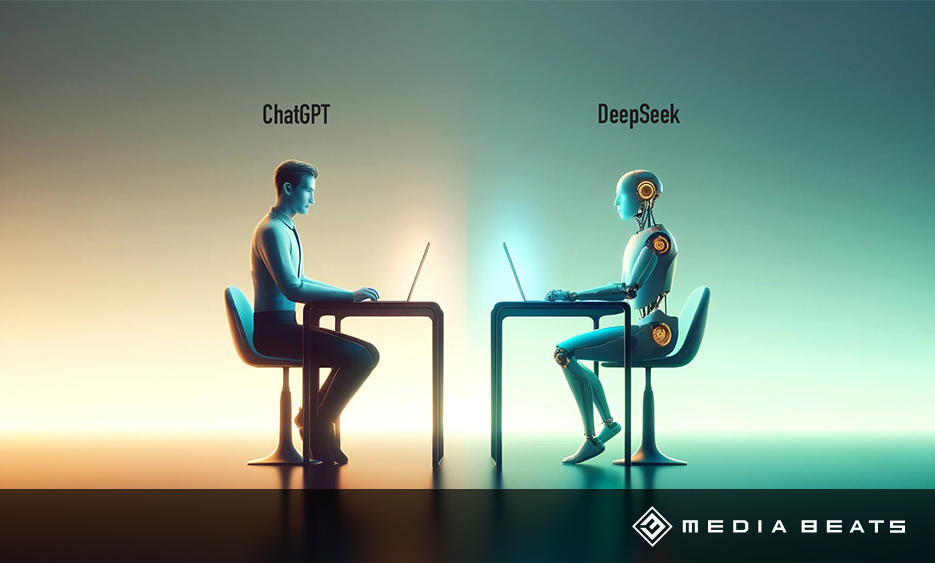

Since the BFSG came into force, many new service providers have entered the market. Companies should therefore choose carefully and look for proven experience. Choosing an inexperienced provider can lead to higher costs and legal problems later on. We recommend full-service agencies that specialize in online marketing and accessibility and offer both technical and strategic consulting.

Why is consulting during development so important?

Support in the development of accessible digital services

Accessibility must be considered from the very beginning to ensure sustainable and efficient implementation. An experienced consultant during the development phase helps identify and avoid errors early on. This allows technical and design measures to be purposefully integrated without the need for costly revisions later. It saves time and money while ensuring high quality.

Benefits of working with experienced experts

Service providers with many years of expertise use proven methods and tools for digital accessibility. They are well-versed in legal requirements and offer practical, solution-oriented approaches. This ensures smooth implementation and reduces legal risks.

Why is legal advice indispensable?

What role does legal assistance play under the BFSG?

The BFSG is a law with clear obligations and consequences for non-compliance. Legal advice helps companies interpret and implement its requirements correctly. Lawyers specializing in digital accessibility help identify liability risks and clarify legal questions. They also assist in preparing for audits or complaints.

When should companies seek legal advice?

Ideally, companies seek legal advice before technical implementation begins. This allows potential conflicts or uncertainties to be addressed early on and ensures that the strategy is built on a legally sound foundation.

What potential does accessibility offer beyond the law?

Why should companies see accessibility as an opportunity and not just an obligation?

Many companies view accessibility as a burdensome obligation, but it holds far more potential. An accessible website improves the user experience for all users, not just for people with disabilities. This increases customer satisfaction and promotes a positive brand perception.

How can accessibility open up new target groups?

People with disabilities represent a large and often underestimated consumer group. Accessible digital services actively engage and include these target audiences. Older people and users with temporary limitations also benefit, as do those with poor internet connections or outdated hardware.

What role does accessibility play in online marketing?

Accessibility is considered a mark of quality and builds trust and reputation. Companies that treat accessibility as an integral part of their online marketing stand out from the competition. Accessibility can also support SEO: descriptive alt texts, a clear heading structure, and semantic markup make content easier for search engines to interpret.

Conclusion: Accessibility as a strategic factor

The Accessibility Strengthening Act presents companies with new challenges and opportunities. A structured start with thorough testing, ongoing support from experienced consultants, and legal advice is crucial for success. Those who see accessibility not just as an obligation but as an opportunity improve the user experience, gain more customers, and strengthen their brand in the long term.

As a full-service agency, we bring the expertise to support you in implementing the BFSG. With experience in online, email, and performance marketing, we help you firmly anchor digital accessibility in your strategy.

Contact us today to create an inclusive and successful digital presence together. Barrier-free communication is future-oriented and strengthens your competitive position. We look forward to hearing from you!